ASUS Unveils the Latest ASUS AI POD Featuring NVIDIA GB300 NVL72

Major orders secured as ASUS expands leadership in AI infrastructure solutions

- ASUS AI POD debut at GTC 2025: Next-generation AI infrastructure, featuring the NVIDIA® GB300 NVL72 platform, with significant order placements secured

- Comprehensive AI solutions showcase: Full lineup of AI servers, including models powered by NVIDIA® Blackwell, NVIDIA HGX™ and NVIDIA MGX™

- Enterprise-ready AI deployments: End-to-end AI solutions, from hardware to cloud-based applications, ensuring seamless deployment and efficiency

- Groundbreaking AI supercomputer: ASUS Ascent GX10, powered by the NVIDIA® GB10 Grace Blackwell Superchip, for AI models with up to 200 billion parameters

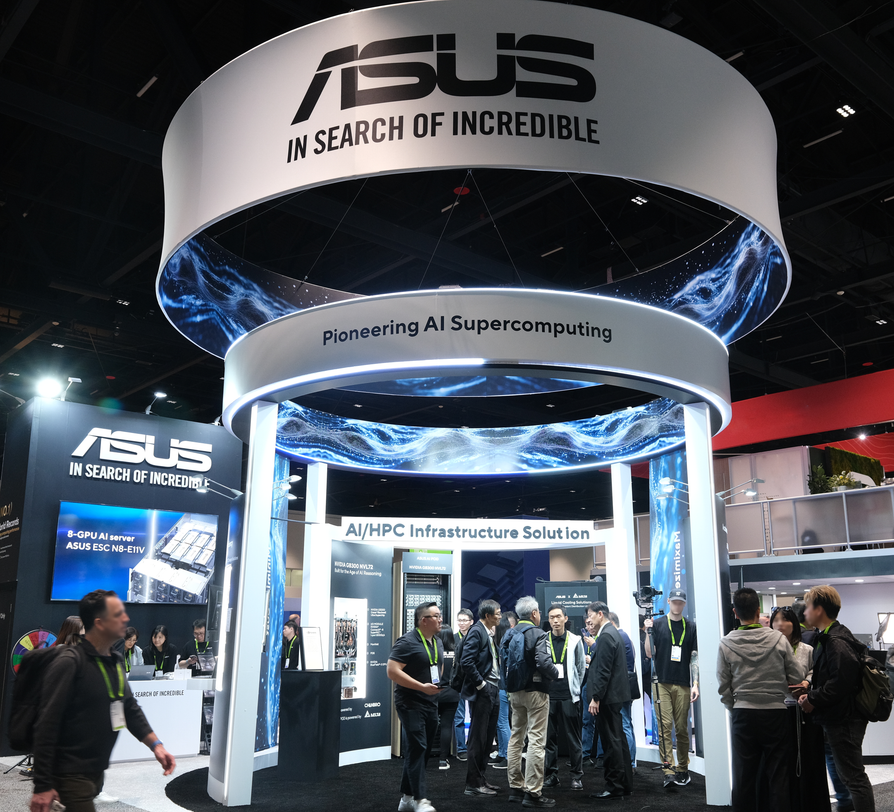

TAIPEI, Taiwan, March 18, 2025 — ASUS today joined GTC 2025 (Booth #1523) as a Diamond sponsor to showcase the latest ASUS AI POD with the NVIDIA® GB300 NVL72 platform. The company is also proud to announce that it has already garnered substantial order placements, marking a significant milestone in the technology industry.

At the forefront of AI innovation, ASUS also presents the latest AI servers in the Blackwell and HGX™ family line-up. These include ASUS XA NB3I-E12 powered by NVIDIA HGX B300 NVL16, ASUS ESC NB8-E11 with NVIDIA HGX B200 8-GPU, ASUS ESC N8-E11V with NVIDIA HGX H200, and ASUS ESC8000A-E13P/ESC8000-E12P supporting NVIDIA RTX PRO 6000 Blackwell Server Edition with MGX™ architecture. With a strong focus on fostering AI adoption across industries, ASUS is positioned to provide comprehensive infrastructure solutions in combination with the NVIDIA AI Enterprise and NVIDIA Omniverse platforms, empowering clients to accelerate their time to market.

ASUS AI POD with NVIDIA GB300 NVL72

By integrating the immense power of the NVIDIA GB300 NVL72 server platform, ASUS AI POD offers exceptional processing capabilities — empowering enterprises to tackle massive AI challenges with ease. Built with NVIDIA Blackwell Ultra, GB300 NVL72 leads the new era of AI with optimized compute, increased memory, and high-performance networking, delivering breakthrough performance.

It is equipped with 72 NVIDIA Blackwell Ultra GPUs and 36 Grace CPUs in a rack-scale design that delivers increased AI FLOPS and provides up to 40TB of high-speed memory per rack. It also includes networking platform integration with NVIDIA Quantum-X800 InfiniBand and Spectrum-X Ethernet, SXM7 and SOCAMM modules designed for serviceability, a 100% liquid-cooled design, and support for trillion-parameter LLM inference and training. This launch represents a significant advancement in AI infrastructure, providing customers with a reliable and scalable solution to meet their evolving needs.

ASUS has demonstrated expertise in building NVIDIA GB200 NVL72 infrastructure from the ground up. Also on show is the ASUS RS501A-E12-RS12U, a software-defined storage (SDS) server designed to help achieve peak computing efficiency. This powerful SDS server effectively reduces latency in data training and inferencing, and complements NVIDIA GB200 NVL72. ASUS offers an extensive service scope from hardware to cloud-based applications, covering architecture design, advanced cooling solutions, rack installation, large-scale validation and deployment, and AI platforms, drawing on its extensive expertise to help clients achieve AI infrastructure excellence and accelerate their time to market.

Kaustubh Sanghani, vice president of GPU products at NVIDIA, commented: "NVIDIA is working with ASUS to drive the next wave of innovation in data centers. Leading ASUS servers combined with the Blackwell Ultra platform will accelerate training and inference, enabling enterprises to unlock new possibilities in areas such as AI reasoning and agentic AI."

GPU servers for heavy generative AI workloads

At GTC 2025, ASUS will also showcase a series of NVIDIA-certified servers, supporting applications and workflows built with the NVIDIA AI Enterprise and Omniverse platforms. These versatile models enable seamless data processing and computation, improving generative AI application performance.

The ASUS 10U ESC NB8-E11 is equipped with the NVIDIA Blackwell HGX B200 8-GPU for unmatched AI performance. The ASUS XA NB3I-E12 features HGX B300 NVL16, with increased AI FLOPS, 2.3TB of HBM3e memory, and networking platform integration with NVIDIA Quantum-X800 InfiniBand and Spectrum-X Ethernet. Blackwell Ultra delivers breakthrough performance for AI reasoning, agentic AI and video inference applications to meet the evolving needs of every data center.

Finally, the 7U ASUS ESC N8-E11V dual-socket server is powered by eight NVIDIA H200 GPUs, supports both air-cooled and liquid-cooled options, and is engineered to provide effective cooling and innovative components — ready to deliver thermal efficiency, scalability and unprecedented performance.

Scalable servers to master AI inference optimization

ASUS also presents server and edge AI options for AI inferencing: the ASUS ESC8000 series, embedded with the latest NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs. ASUS ESC8000-E12P is a high-density 4U server that accommodates eight dual-slot high-end NVIDIA H200 GPUs and supports the NVIDIA AI Enterprise and Omniverse software suites. It is also fully compatible with NVIDIA MGX architecture to ensure flexible scalability and fast, large-scale deployment. Additionally, the ASUS ESC8000A-E13P, a 4U NVIDIA MGX server, supports eight dual-slot NVIDIA H200 GPUs and provides seamless integration, optimization and scalability for modern data centers and dynamic IT environments.

Groundbreaking AI supercomputer, ASUS Ascent GX10

ASUS today also announces its groundbreaking AI supercomputer, ASUS Ascent GX10, in a compact package. Powered by the state-of-the-art NVIDIA GB10 Grace Blackwell Superchip, it delivers 1,000 AI TOPS of performance, making it ideal for demanding workloads. Ascent GX10 is equipped with a Blackwell GPU, a 20-core Arm CPU, and 128GB of memory, supporting AI models with up to 200 billion parameters. This revolutionary device places the formidable capabilities of a petaflop-scale AI supercomputer directly onto the desks of developers, AI researchers and data scientists around the globe.
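To put the 128GB and 200-billion-parameter figures in context, the following is a rough back-of-the-envelope sketch of how much memory model weights alone would occupy at different precisions. The announcement does not state which precision the 200-billion-parameter figure assumes, so the bit widths below are illustrative assumptions, and the helper name `weight_footprint_gb` is hypothetical. The estimate ignores activations, KV cache and runtime overhead.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for model weights alone, in decimal GB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


MEMORY_GB = 128      # memory capacity quoted in the announcement
NUM_PARAMS = 200e9   # model size quoted in the announcement

# The bit widths are assumptions for illustration, not stated specifications.
for bits in (16, 8, 4):
    need = weight_footprint_gb(NUM_PARAMS, bits)
    verdict = "fits" if need <= MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: {need:.0f} GB -> {verdict} in {MEMORY_GB} GB")
```

Under these assumptions, only the 4-bit case (about 100 GB of weights) falls within 128GB, which suggests the quoted model size relies on low-precision weights.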

Edge AI computers from ASUS IoT are also showcased at GTC, highlighting their advanced capabilities in AI inference at the edge. The ASUS IoT PE2100N edge AI computer, powered by the NVIDIA Jetson AGX Orin system-on-module and NVIDIA JetPack SDK, delivers up to 275 TOPS, making it ideal for generative AI, visual language model (VLM), and large language model (LLM) applications. These applications enable natural language interactions with videos and images for event identification or object detection. With low power consumption and optional 4G/5G and out-of-band technology support, it is well suited to smart cities, robotics, and in-vehicle solutions. Additionally, the ASUS IoT PE8000G rugged edge AI GPU computer, supporting dual 450W NVIDIA RTX PCIe graphics cards, excels in real-time AI inference, pre-processing, and perception AI. With flexible 8-48V DC power input, ignition power control, and operational stability from -20°C to 60°C, the PE8000G is suited for computer vision, autonomous vehicles, intelligent video analytics, and generative AI in harsh environments.

Achieving infrastructure excellence to maximize efficiency and minimize cost

ASUS is dedicated to delivering excellence through innovative AI infrastructure solutions that address the rigorous demands of high-performance computing (HPC) and AI workloads. Our meticulously designed servers and storage systems enhance performance, reliability and scalability to meet diverse enterprise needs.

Our L12-ready infrastructure solutions embrace sustainable practices throughout the data center lifecycle, featuring energy-efficient power supplies, advanced cooling systems, cloud services and software applications. Choosing an ASUS server transcends mere hardware acquisition. With our self-owned software platform, ASUS Infrastructure Deployment Center (AIDC), which supports large-scale deployments, plus ASUS Control Center (ACC), designed for remote IT monitoring and management, as well as support for NVIDIA AI Enterprise and NVIDIA Omniverse, we are able to empower our clients to achieve a seamless AI and HPC experience. This not only reduces operational costs and time but also ensures that high service levels are consistently maintained. This holistic approach effectively minimizes total cost of ownership (TCO) and power-usage effectiveness (PUE) while significantly reducing environmental impact.
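For readers less familiar with the metric, PUE is commonly defined as total facility energy divided by the energy consumed by IT equipment, so a value closer to 1.0 means less energy spent on cooling and other overhead. The sketch below illustrates the calculation. All figures are hypothetical and are not ASUS measurements, and the function names are chosen for illustration only.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on non-IT overhead (cooling, power delivery) at a given PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


# Hypothetical example: the same 1,000 kWh IT load in two facilities.
it_load = 1000.0
for total in (1500.0, 1200.0):
    value = pue(total, it_load)
    print(f"total={total:.0f} kWh -> PUE={value:.2f}, "
          f"overhead={overhead_kwh(it_load, value):.0f} kWh")
```

In this hypothetical comparison, lowering PUE from 1.5 to 1.2 cuts overhead energy for the same IT load from 500 kWh to 200 kWh.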

With 30 years of experience in the server industry since 1995, ASUS is poised to empower clients in navigating the competitive landscape of AI. Our innovative AI-driven solutions enable dynamic workload management, ensuring that computational tasks are allocated to the most efficient resources. By leveraging our extensive technological capabilities and industry-leading expertise, ASUS is committed to supporting organizations as they tackle the complexities of AI infrastructure demands while driving innovation and sustainability.